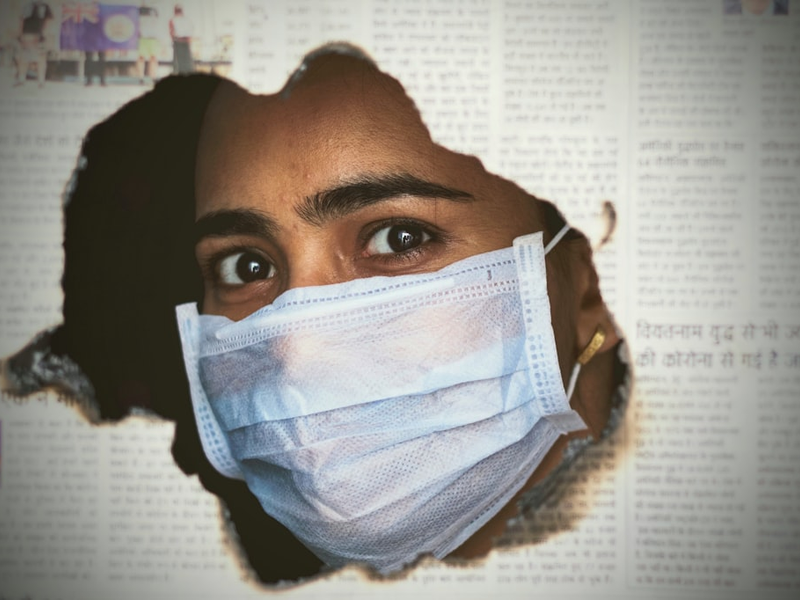

AI Is Totally Gaslighting Women and People of Color About Their Health Concerns

Photo by Himanshu Pandey on Unsplash

Silicon Valley’s latest tech “innovation” is proving to be another spectacular fail in the ongoing saga of systemic bias. AI medical diagnostic tools are revealing a disturbing trend: they’re consistently downplaying symptoms reported by women and people of color.

Imagine pouring your heart out about persistent pain or troubling health symptoms, only to have a robotic algorithm essentially tell you, “It’s all in your head.” Welcome to the dystopian reality of AI healthcare in 2025.

The Algorithmic Discrimination Playbook

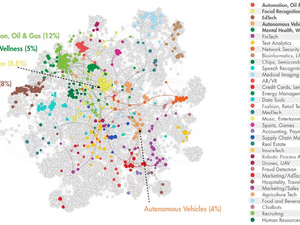

Researchers have uncovered that machine learning models are perpetuating centuries-old medical biases by training on historically skewed datasets. These algorithms learn from medical records that have traditionally centered white, male patient experiences, effectively rendering everyone else’s health narratives invisible.

Tech’s Ongoing Diversity Problem

This isn’t just a medical issue; it’s a glaring reflection of tech’s persistent diversity crisis. When AI development teams lack representation, the systems they create inevitably encode their own unconscious biases into supposedly “neutral” technologies.

What Now?

As tech continues to infiltrate healthcare, we need radical transparency and diverse teams driving algorithmic development. Medical AI must be rebuilt from the ground up with intentional, intersectional perspectives that center marginalized experiences.

AUTHOR: mp

SOURCE: Financial Times