AI Chatbots: Your New Therapist or Mental Health Nightmare?

Photo by Emily Underworld on Unsplash

In a digital age where AI is becoming our go-to confidant, OpenAI just dropped some seriously eye-opening stats about mental health conversations on ChatGPT. Buckle up for a wild ride into the intersection of technology and emotional vulnerability.

The numbers are jaw-dropping: an estimated 1 million users per week are having suicide-related conversations with our friendly neighborhood chatbot. OpenAI calls these conversations “extremely rare” as a share of overall usage, but the sheer volume suggests we’re dealing with something more significant than a tech blip.

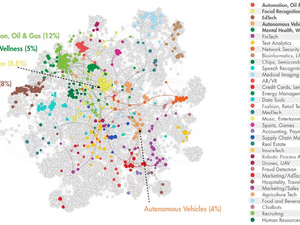

The Mental Health Algorithm

OpenAI says its latest GPT-5 model is getting better at handling sensitive mental health conversations. The company reports a 92% compliance rate with its “desired behaviors” - which basically means the AI is learning not to totally mess up when someone’s in crisis.

The Corporate Tightrope

Despite these serious concerns, CEO Sam Altman is simultaneously planning to unleash more “adult-oriented” conversational features. It’s like trying to balance a mental health hotline with an R-rated chatroom - peak Silicon Valley energy.

The Human Element

While AI continues its complex dance with human emotions, one thing remains crystal clear: technology can never replace genuine human connection. These stats aren’t just numbers; they’re a stark reminder that behind every chat message is a real person seeking understanding.

Remember, if you or someone you know is struggling, the 988 Suicide & Crisis Lifeline is available around the clock in the US: call or text 988, or dial the older number, 1-800-273-TALK, which still connects - no AI required.

AUTHOR: kg

SOURCE: Ars Technica