AI Is Lowkey Discriminating Against Women and Minorities in Healthcare - Here's Why You Should Care

Photo by Christina @ wocintechchat.com on Unsplash

Tech bros, we need to talk about the latest drama in AI healthcare. 🚨

Artificial intelligence might be the next big thing in medicine, but it’s also revealing some seriously problematic biases that could literally put lives at risk. Researchers have found that AI medical tools disproportionately downplay symptoms in women and ethnic minorities - and yes, it’s exactly as messed up as it sounds.

The Bias Behind the Algorithms

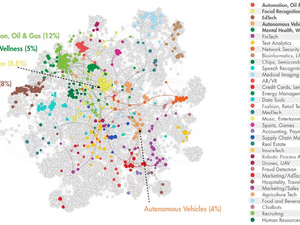

These AI systems aren’t just neutral number-crunchers. They’re trained on datasets drawn overwhelmingly from white, male patients, so they don’t represent actual patient populations. Think of it like trying to understand the Bay Area by only talking to tech startup founders - you’re gonna miss a LOT.

Why Diversity in Data Matters

Leading researchers are now calling for more inclusive training datasets. The goal? Creating AI tools that actually understand and accurately assess health risks across different demographics. Some teams, like those at University College London, are already working on models that represent broader population experiences.
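Want a feel for what "accurately assess health risks across different demographics" could look like in practice? Here's a rough sketch of one basic check: for each group, how often does a tool wave off a case that was actually serious? Everything in it is made up for illustration (the `miss_rate_by_group` function, the `group_a`/`group_b` labels, and the toy records), and it isn't the method any of the researchers mentioned here use.

```python
from collections import defaultdict

def miss_rate_by_group(records):
    """Return, per group, the share of truly serious cases the tool did not flag."""
    serious = defaultdict(int)  # truly serious cases seen per group
    missed = defaultdict(int)   # truly serious cases the tool failed to flag
    for group, truly_serious, flagged in records:
        if truly_serious:
            serious[group] += 1
            if not flagged:
                missed[group] += 1
    return {group: missed[group] / serious[group] for group in serious}

# Invented toy records, NOT real data: (group, truly_serious, flagged_by_tool)
toy_records = [
    ("group_a", True, True),
    ("group_a", True, True),
    ("group_a", True, False),
    ("group_a", True, True),
    ("group_b", True, False),
    ("group_b", True, True),
    ("group_b", True, False),
    ("group_b", True, True),
    ("group_a", False, False),
    ("group_b", False, False),
]

rates = miss_rate_by_group(toy_records)
for group, rate in sorted(rates.items()):
    print(f"{group}: missed {rate:.0%} of serious cases")
```

In this toy data the tool misses twice as many serious cases in one group as in the other. A single overall accuracy number would hide that gap, which is why the results are split out by group.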

The Privacy and Potential Pitfalls

But it’s not just about bias. These AI systems also raise major privacy concerns. With millions of patient records being used for training, there’s a real risk of sensitive medical information being compromised or misused.

The bottom line? We need technology that serves everyone - not just the privileged few. Until then, stay woke and keep asking questions about the algorithms making decisions about our health. 💪🏽🩺

AUTHOR: tgc

SOURCE: Ars Technica