AI's Magic Trick: How Tiny Models Are Stealing the Tech World's Spotlight

Photo by Steve Johnson on Unsplash

Tech nerds, gather 'round: a technique called “distillation” is shaking up the AI world. Forget those massive, energy-guzzling AI models that cost more than your entire tech startup. Distillation squeezes much of what they can do into models that are far smaller and cheaper to run, and big tech companies are paying close attention.

Back in 2015, researchers at Google, including the AI rockstar Geoffrey Hinton, came up with a wild idea. Imagine taking a massive, complicated AI model and shrinking it down to a lean, mean learning machine that keeps most of what the big one knows. Sounds like tech magic, right?

The Secret Sauce of AI Downsizing

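Here’s the genius part: a big “teacher” model doesn’t just pick an answer, it assigns a probability to every possible answer, and those probabilities reveal that some wrong answers are far less wrong than others (mistaking a dog for a fox is a smaller slip than mistaking it for a car). Instead of treating all wrong answers as equally terrible, the researchers found a way to pass this “dark knowledge” on to a smaller “student” model by training it to match the teacher’s full spread of probabilities rather than just the correct label. Think of it like an AI mentorship program where the big brain teaches the little brain not just what’s right, but the nuanced shades of being wrong.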
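For the curious, here is a minimal sketch of that idea in plain Python. It is an illustration under stated assumptions, not the researchers’ actual code: the class names, the scores, and the helper names `softmax` and `distillation_loss` are all made up for this example. A “temperature” setting softens the teacher’s probabilities so the near-misses show up more clearly, and the student is scored on how closely its own softened probabilities match. The full recipe typically also mixes in a loss on the true labels, which this sketch leaves out.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy between the teacher's softened probabilities and the student's."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs))

# Hypothetical teacher scores for the classes [dog, fox, car] on a photo of a dog.
teacher_logits = [5.0, 3.0, -2.0]

hard = softmax(teacher_logits, temperature=1.0)  # "fox" is barely visible
soft = softmax(teacher_logits, temperature=4.0)  # "fox" gets a much larger share

# Two hypothetical students: one agrees that "fox" is the nearest miss, one thinks "car" is.
student_close = [4.0, 2.5, -1.0]
student_far = [4.0, -1.0, 2.5]

loss_close = distillation_loss(student_close, teacher_logits)
loss_far = distillation_loss(student_far, teacher_logits)

print("hard targets:", [round(p, 3) for p in hard])
print("soft targets:", [round(p, 3) for p in soft])
print("loss (agrees with teacher):", round(loss_close, 3))
print("loss (disagrees with teacher):", round(loss_far, 3))
```

Both students pick “dog” as the top answer, yet the one that ranks the wrong answers the way the teacher does gets the lower loss. That gap is the “dark knowledge” at work: the student is rewarded for learning which mistakes are close calls.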

From Expensive Giants to Nimble Performers

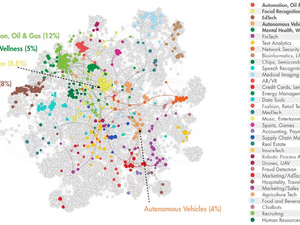

This technique isn’t just theoretical anymore. Companies like Google, OpenAI, and Amazon are now using distillation to create smaller, cheaper AI models that come close to matching their massive counterparts on many tasks. In one mind-blowing example, researchers at UC Berkeley created a reasoning model for less than $450 that competed with much larger models.

The Future is Lean and Mean

What does this mean for tech? Smaller AI models that are cheaper to train, more energy-efficient, and potentially accessible to more people and companies. It’s like democratizing AI, one tiny model at a time. The tech world might never be the same again.

AUTHOR: kg

SOURCE: Wired